Rikard Herlitz: how purpose and capability build true AI innovation

In the current AI gold rush, it’s easy to mistake a shiny wrapper for the real thing.

Many of today’s “AI-first” companies are building clever tools on top of someone else’s foundation models. They’re masters of the interface, but they don’t build the engine.

At Epidemic Sound, we’ve taken a different approach.

While we’re known for music, we’ve quietly been building one of the most advanced audio AI engines in the world. It’s the unseen layer that makes our simple, intuitive tools possible – and it’s powered by one thing: purpose with engineering depth.

The why: building for a win-win future

Purpose gives direction. Engineering gives momentum. In AI, one without the other isn’t enough.

Our purpose has always been clear: to simplify soundtracking for creators and help artists thrive. This belief has guided every decision since our founding – from pioneering our PRO-free model to building technology that creates win-win outcomes for both artists and creators.

That clarity drives how we innovate. We don’t build tech for its own sake; we build what’s needed to make our ecosystem fairer, safer, and more empowering for everyone who creates. (As my colleague Sam Hall explores here, purpose is most powerful when it drives mutual benefit.)

And in the age of AI, that purpose remains our north star. It pushes us to create tools that extend human creativity – not replace it.

The how: building the engine, not the interface

Purpose without capability is just a slogan. To truly innovate, you have to build.

That’s why, instead of layering features over someone else’s models, we’ve invested in developing our own:

- Music foundation models that understand the DNA of sound.

- ControlNets that allow nuanced creative direction.

- Audio embedding systems that power intelligent search and recommendation.

- Advanced fingerprinting technology that protects rights and ensures commercial safety.

These are not off-the-shelf systems – they’re built to address the complex world of music and soundtracking.

They power the features creators already know and love – from semantic search and audio similarity to stem-separated adaptation and dynamic intensity controls.
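
For readers curious how embedding-based audio similarity works in principle, here is a minimal sketch. It is not Epidemic Sound's implementation: the track names, the three-dimensional vectors, and the `most_similar` helper are all hypothetical, and real audio embeddings typically have hundreds of dimensions. The idea is simply that each track becomes a vector and "similar" means "pointing in a similar direction".

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, invented for illustration only.
CATALOG = {
    "upbeat_pop": [0.9, 0.1, 0.2],
    "calm_piano": [0.1, 0.9, 0.3],
    "driving_rock": [0.8, 0.2, 0.5],
}

def most_similar(query, catalog, top_k=2):
    """Return the names of the top_k tracks closest to the query vector."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in scored[:top_k]]

# A query vector that sits close to the energetic tracks.
results = most_similar([0.85, 0.15, 0.3], CATALOG)
print(results)  # ['upbeat_pop', 'driving_rock']
```

Semantic search follows the same pattern, assuming a text query can be mapped into the same vector space as the audio.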

Each capability reflects our philosophy: technology should amplify human creativity, not automate it away.

The what: from sound to feeling

Our advantage isn’t just in what we build, but in what we build it on.

Because we own all rights to our catalog – and it’s PRO-free – we can train our models on our entire, high-quality dataset safely and ethically. This gives us one of the most capable and legally robust training grounds in the industry.

And that’s only half the story. Our tracks are played more than three billion times every day across social media and content platforms. That gives us something no one else has: contextual intelligence.

It’s the difference between knowing the BPM and knowing the vibe.

Each play teaches our models how humans use music to tell stories. We don’t just understand what a track sounds like – we understand what it feels like in context.

That emotional and narrative understanding is what enables truly human-first AI – AI that feels, not just functions.

Opening the engine

For years, our AI engine powered our own products – our website, apps, and integrations for video editors. Those platforms remain at our core, but we’re now opening the engine itself.

We’re making our technology accessible through a universal, developer-friendly interface built on the Model Context Protocol (MCP).

MCP allows anyone – from emerging “vibecoders” experimenting with conversational AI to enterprises building intelligent workflows – to integrate context-aware, rights-safe soundtracking directly into their systems.
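
To give a sense of what an integration might look like on the wire, here is a rough sketch of an MCP tool call. MCP messages use JSON-RPC 2.0, and tools are invoked with the `tools/call` method; everything else here is an assumption for illustration. The `search_tracks` tool name and its `query` and `max_results` arguments are hypothetical, not a documented Epidemic Sound API.

```python
import json

def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request that asks an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

# Hypothetical tool name and arguments, invented for this example.
request = build_tool_call(
    1,
    "search_tracks",
    {"query": "hopeful, slow-building score for a travel vlog", "max_results": 5},
)

# Serialized form that an MCP client would send over its transport.
payload = json.dumps(request)
print(payload)
```

In practice an MCP client library or an AI agent would build and send this message on the developer's behalf; the sketch only shows the shape of the request.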

We’re building for the coming age of agentics – when autonomous AI agents can execute entire creative projects end-to-end.

In that world, Epidemic Sound provides the definitive soundtracking component – an AI that understands intent, emotion, and context rather than seeking to replace human creativity.

The outcome: where purpose meets innovation

The work we’ve been doing quietly in the background is now stepping into the light.

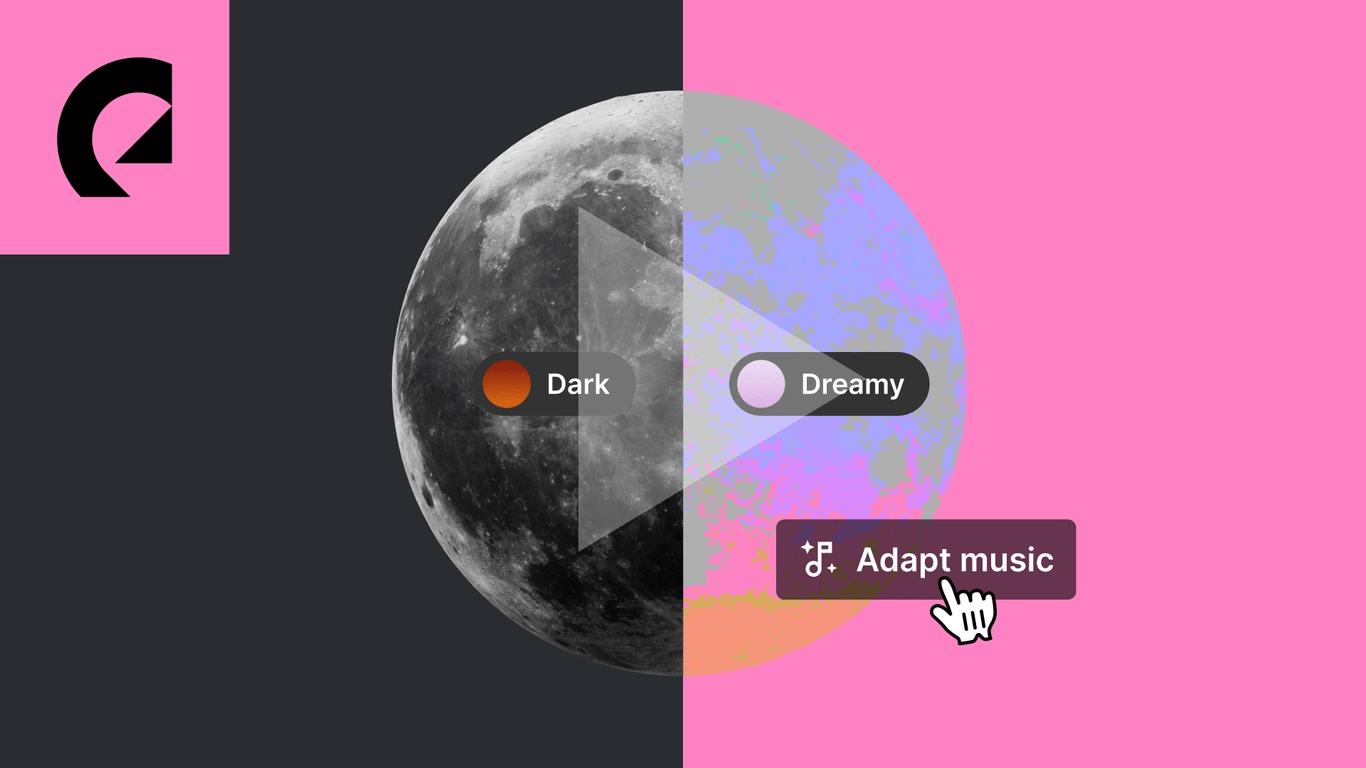

Our recent releases, from Adapt and Assistant to our visionary new Studio, mark the next step in our mission to soundtrack the world.

The story of Epidemic Sound has always been one of building – building models, systems, and above all, trust.

AI is already an integral part of the creative process. Our goal is to continue to pair purpose with capability – using AI not to replace creativity, but to help people express it in ways we’ve never heard before.

About Rikard Herlitz

Rikard Herlitz is Chief Technology Officer at Epidemic Sound. He joined in January 2023 from Google Meet, where he was Engineering Director. Rikard previously served as Chief Technology Officer at Mojang Studios, where he focused on the development of Minecraft. He has also founded companies including Goo Technologies and Ardor Labs, where he built products used by companies such as Amazon and NASA.